
* Corresponding author: dbegault@mail.arc.nasa.gov

Direct comparison of the impact of head tracking, reverberation, and individualized head-related transfer functions on the spatial perception of a virtual speech source

Audio Engineering Society 108th Conference, 19-22 February 2000, Paris

Durand R. Begault / NASA Ames Research Center *

Elizabeth M. Wenzel / NASA Ames Research Center

Alexandra S. Lee / San José State University

Mark R. Anderson / Raytheon STX Corporation

ABSTRACT

A study was performed using headphone-delivered virtual speech stimuli, rendered via HRTF-based acoustic auralization software and hardware from blocked-meatus HRTF measurements. The independent variables were chosen to evaluate commonly held assumptions in the literature regarding improved localization: inclusion of head tracking, individualized HRTFs, and early and diffuse reflections. Significant differences were found for azimuth and elevation error, reversal rates, and externalization.

1. INTRODUCTION

A review of the literature related to perception of headphone-delivered 3-D sound suggests that optimal localization performance and perceived realism result from including factors in virtual acoustic synthesis that emulate our everyday spatial hearing experiences. These include (1) head-tracked virtual stimuli [1-3], (2) synthesis of a realistic diffuse field via auralization techniques [4-6], and (3) the use of individualized (as opposed to non-individualized) head-related transfer functions (HRTFs) [7-9]. However, these conclusions have never been tested within a single study that directly compared the perceptual effects of these factors to determine their relative advantages. The motivation of the current study was therefore to pit these factors directly against one another, using speech stimuli.

The many studies that have evaluated virtual localization accuracy use different types of stimuli and experimental paradigms, making comparison difficult. In particular, noise stimuli probably bias performance

Begault, D. R., et al., “Direct comparison……”


differentially compared with more familiar everyday stimuli, such as speech. For example, a listener has no real-world, cognitive reference for the distance of a noise burst. Noise stimuli are particularly ill-suited to reverberation studies, since the diffuse field of a sound decay resembles frequency-weighted noise in the time domain, resulting in “noise convolved with decaying noise”. Such a stimulus would lack meaningful sensory cues from the reverberant field.
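The remark that a reverberant decay resembles frequency-weighted noise can be made concrete with a common textbook idealization of a late reverberation tail: Gaussian noise under an exponential envelope that falls by 60 dB over the reverberation time T60. The sketch below assumes that idealization; it is not the auralization method used in this study, and the function names, the 48 kHz rate and the 1.5 s T60 are illustrative choices.

```python
import math
import random


def decay_envelope(t: float, t60: float) -> float:
    """Amplitude envelope that falls by 60 dB (a factor of 1000) after t60 seconds."""
    return 10.0 ** (-3.0 * t / t60)


def decaying_noise_tail(t60: float, fs: int, duration: float, seed: int = 0) -> list[float]:
    """Illustrative late-reverberation model: white Gaussian noise under an exponential envelope."""
    rng = random.Random(seed)
    n = int(duration * fs)
    return [rng.gauss(0.0, 1.0) * decay_envelope(i / fs, t60) for i in range(n)]


def rms(x: list[float]) -> float:
    """Root-mean-square value of a signal segment."""
    return math.sqrt(sum(v * v for v in x) / len(x))


if __name__ == "__main__":
    fs = 48_000
    tail = decaying_noise_tail(t60=1.5, fs=fs, duration=1.5)
    window = fs // 10  # 100 ms analysis windows
    drop_db = 20.0 * math.log10(rms(tail[:window]) / rms(tail[-window:]))
    print(f"level drop between first and last 100 ms: {drop_db:.1f} dB")
```

Convolving a noise burst with such a tail again yields noise with a decaying envelope, which is why the reverberant field of a noise stimulus offers the listener little distinctive structure.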

Brief (3 s) speech segments were used in the current experiment, both because reverberation was evaluated and because speech is obviously relevant to 3-D audio applications such as teleconferencing. The trade-off in using speech to evaluate localization accuracy is that the average speech spectrum contains little acoustical power at the frequencies where the HRTF provides elevation cues that a listener can use. For that reason, only eye-level elevations were evaluated in the current study, as in a previous speech localization study we conducted [10].

Individualized HRTFs have been cited in the literature as improving localization accuracy, increasing externalization, and reducing reversal errors [2, 9, 11]. Møller et al. conducted a source-identification experiment using speech stimuli which suggested that non-individualized HRTFs increased the number of reversals but had no effect on externalization; however, all conditions in that study were reverberant and used no head tracking [9].

The literature also indicates that reverberation, or even a few “early reflections” (or simply attenuated delays), is sufficient to produce image externalization [5, 12, 13]. In the current study, the experimental variables included anechoic stimuli, stimuli with HRTF-filtered early reflections (0-80 ms), and stimuli with “full auralization” reverberation (the same early reflections plus a simulation of the late reverberant sound field from 80 ms to 2.2 s).

2. SUBJECTS

Nine paid subjects (4 female, 5 male; age range 18-40; hearing ability within 15 dB HL), all inexperienced with virtual acoustic experiments, participated. They were not told the purpose of the experiment.

Prior to the experiment, subjects were instructed in how to make azimuth, elevation, distance and realism judgments on the interactive, self-paced software interface described below. For distance judgments, they were told to attend to whether the sound seemed inside or outside the head, and that the maximum distance judgment possible on the graphic corresponded to “more than 4 inches outside the edge of the head.” Before each experimental trial that used head tracking, an on-screen instruction asked the subject to move their head; subjects were not trained to move their head in any particular manner. Subjects were encouraged to stop when fatigued, and were given breaks between experimental blocks at their request. Subjects required three to four days to complete the entire experiment.


3. EXPERIMENTAL DESIGN

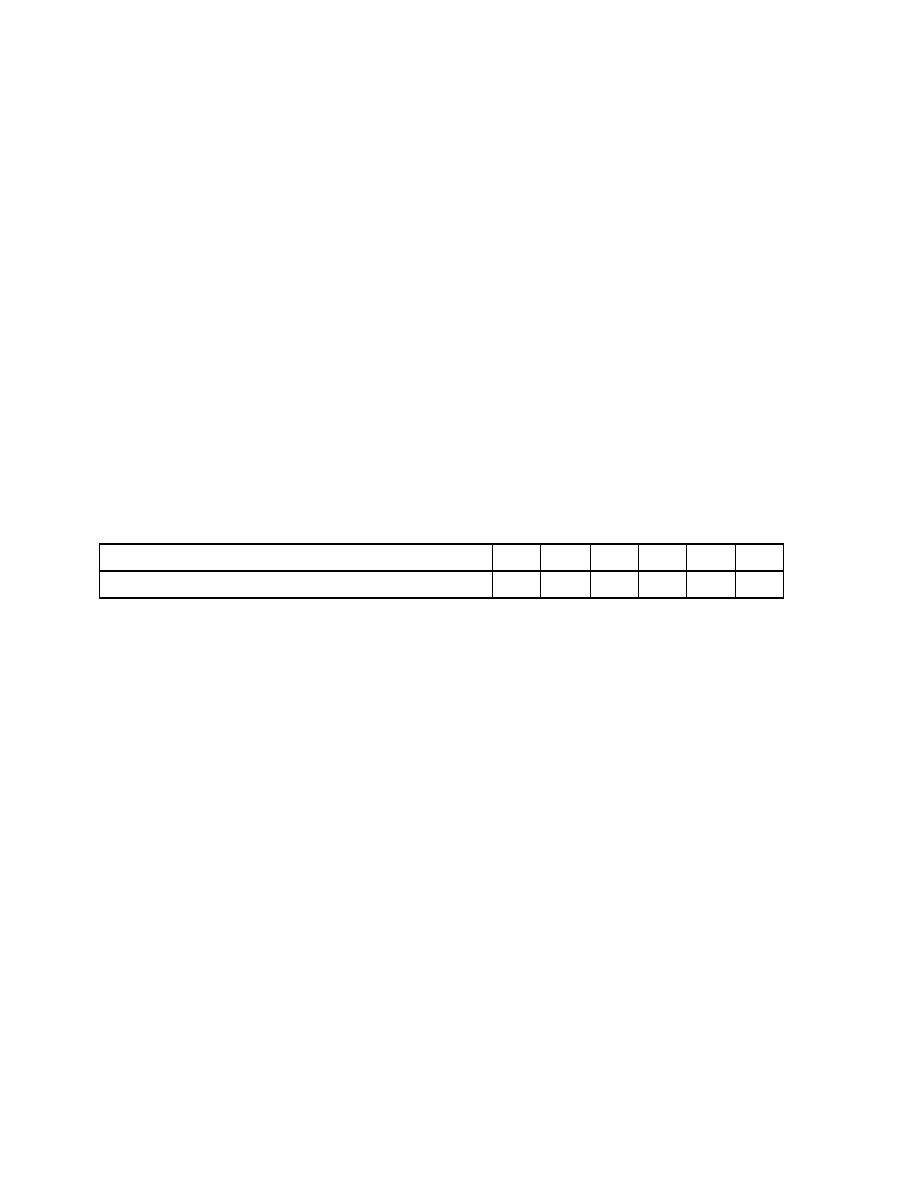

The experimental design shown in Figure 1 was developed to evaluate all combinations of independent variables. “Anechoic”, “early reflection” and “full auralization” refer to the level of diffuse-field simulation used. “Individualized” HRTFs were measured for each subject, while “generic” HRTFs were derived from the Kunstkopf (dummy head) of the Institut für Technische Akustik, University of Aachen (supplied as part of the room modeling software). “Tracking on/off” refers to whether or not the direct-sound HRTFs and (when applicable) the early-reflection HRTFs were updated in real time in response to head movement.
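The fully crossed design can be enumerated programmatically. A minimal Python sketch, assuming one block per condition and a randomized block order; the seed-per-subject scheme is a hypothetical stand-in for however the original experiment software randomized the blocks.

```python
import itertools
import random

# Levels of the three independent variables, as named in Figure 1.
REVERB = ("anechoic", "early reflections", "full auralization")
HRTF = ("individual", "generic")
TRACKING = ("on", "off")


def all_conditions() -> list[tuple[str, str, str]]:
    """Fully crossed design: 3 reverberation x 2 HRTF x 2 tracking = 12 conditions."""
    return list(itertools.product(REVERB, HRTF, TRACKING))


def block_order(seed: int) -> list[tuple[str, str, str]]:
    """One block per condition, shuffled; the seed stands in for a per-subject randomization."""
    order = all_conditions()
    random.Random(seed).shuffle(order)
    return order


if __name__ == "__main__":
    for number, (reverb, hrtf, tracking) in enumerate(block_order(seed=1), start=1):
        print(f"block {number:2d}: {reverb}, {hrtf} HRTF, tracking {tracking}")
```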

Each subject was run under each of the treatment combinations shown in the first three rows of Figure 1, a total of 12 conditions. Only six azimuth positions were used, all at eye level (0 degrees elevation): left and right 45 degrees; left and right 135 degrees; and 0 and 180 degrees. These positions form two pairs lying on two different “cones of confusion”, plus a pair directly on the median plane [14]. The elements of each pair share the same interaural time delay (ITD): left 45 and 135 degrees, 0 and 180 degrees, and right 45 and 135 degrees. Each azimuth position was evaluated 5 times in each block, resulting in 30 trials per block. The order of blocks, azimuth positions, and speech stimuli was randomized, while the combination of experimental treatments was held constant within each block. Each trial took approximately 10 seconds to complete.
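Why the paired positions are confusable can be illustrated with Woodworth's spherical-head approximation of the ITD, ITD = (a/c)(θ + sin θ), where θ is the lateral angle from the median plane. The sketch below assumes that model with a nominal head radius a = 8.75 cm and c = 343 m/s (both assumptions, not values from this study), and also builds a hypothetical randomized 30-trial list for one block.

```python
import math
import random

# Target azimuths in degrees: 0 = front, positive = right, 180 = directly behind.
AZIMUTHS = (-45, 45, -135, 135, 0, 180)
HEAD_RADIUS_M = 0.0875      # assumed spherical-head radius
SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound


def woodworth_itd(azimuth_deg: float) -> float:
    """ITD in seconds for a spherical head (Woodworth's approximation).

    The lateral angle asin(sin(az)) folds front and back together, so a source
    and its front-back mirror image (e.g. 45 and 135 degrees) get the same ITD.
    """
    lateral = math.asin(math.sin(math.radians(azimuth_deg)))
    return HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (lateral + math.sin(lateral))


def block_trials(seed: int, repetitions: int = 5) -> list[int]:
    """Randomized trial list for one block: each azimuth presented `repetitions` times."""
    trials = [az for az in AZIMUTHS for _ in range(repetitions)]
    random.Random(seed).shuffle(trials)
    return trials


if __name__ == "__main__":
    for az in AZIMUTHS:
        print(f"{az:5d} deg: ITD = {woodworth_itd(az) * 1e6:6.1f} us")
    trials = block_trials(seed=1)
    print(f"{len(trials)} trials, about {len(trials) * 10 / 60:.0f} min per block")
```

At roughly 10 s per trial, a 30-trial block lasts about 5 minutes.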

4. STIMULI

4.1 Experimental hardware and software

Subjects listened to the stimuli over headphones (Sennheiser HD-430) in a soundproof booth, at a level of approximately 60 dBA, which corresponds to a normal speech level at a distance of 1 meter. A head-tracking device (Polhemus Fastrak) was attached to the subject’s headphones at all times. The head tracker was interfaced via a TCP/IP socket connection with the simulation software and hardware (Lake DSP “Headscape” and “Vrack” software, CP-4 hardware), which updated the stimuli in response to head movement at a rate of 33 Hz (an update interval of about 30 ms). The end-to-end latency of the entire hardware system averaged 45.3 ms (SD = 13.1 ms), as measured with a swing-arm apparatus (described in [15, 16]).
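These timing figures can be related with simple arithmetic: a 33 Hz update rate implies an interval of about 30 ms, and during a head turn the rendered source trails the head by roughly velocity × latency. A minimal sketch, assuming a constant head angular velocity; the 100 degrees/s value is hypothetical, not a measurement from this study.

```python
def update_interval_ms(rate_hz: float) -> float:
    """Time between successive HRTF updates for a given update rate."""
    return 1000.0 / rate_hz


def angular_lag_deg(latency_ms: float, head_velocity_deg_s: float) -> float:
    """Azimuth by which the rendered source trails a head turning at constant velocity."""
    return head_velocity_deg_s * latency_ms / 1000.0


if __name__ == "__main__":
    print(f"33 Hz update rate -> {update_interval_ms(33.0):.1f} ms interval")
    # 100 deg/s is a hypothetical head-turn speed; latencies are mean and mean +/- 1 SD.
    for latency in (45.3 - 13.1, 45.3, 45.3 + 13.1):
        print(f"latency {latency:4.1f} ms -> lag {angular_lag_deg(latency, 100.0):.1f} deg")
```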

To simulate different azimuths, the simulation rotated the virtual receiver about its central axis to face different directions, rather than moving the sound source. The hardware re-synthesized the stimuli only in response to yaw (azimuth), not to head tilt or roll. Nevertheless, the simulated reverberation always included a full 3-D simulation.

Custom software drove the experimental trials and data collection, and generated

playback of speech recordings from a digital sampler (randomized 3 s anechoic


female and male voice segments from the Archimedes test CD [17]). The software

also coordinated the Lake Vrack program, the sound generator, the head tracking

device, and the stimuli generation.

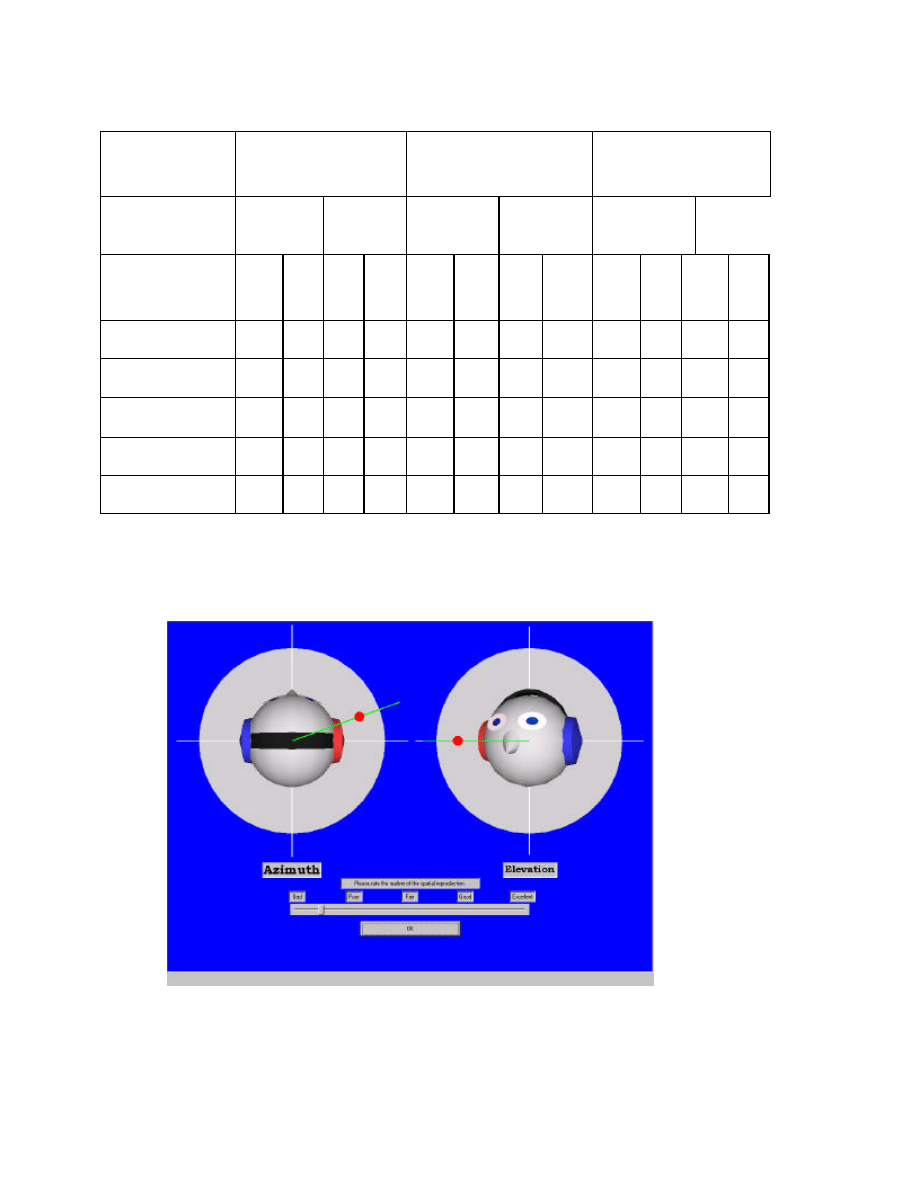

Subjects indicated their responses using the interactive graphic shown in Figure 2; the details of the interface were described previously in [3]. Subjects were required first to indicate the azimuth and distance on the left panel, then the elevation on the right panel of the display, and finally to adjust the slider at the bottom to indicate “perceived realism”. Subjects had to make all judgments before they could proceed to the next trial.

The radius of the outer circle was twice the radius of the head graphic, in order to avoid biasing the externalization judgments; for example, a very large head relative to the available area on the display would probably yield more inside-the-head localization judgments. “Perceived realism” was indicated on a continuous scale labeled with the words “bad”, “poor”, “fair”, “good” and “excellent” at positions corresponding to a 0-4 rating scale. No special instructions were given on how to interpret “realism” or what specifically to listen for.

4.2 HRTF measurement

Before starting the experiment, subjects had their HRTFs measured using a modified Crystal River Engineering “Snapshot” system. This system is based on a blocked-meatus measurement technique (see, e.g., [18]) and has been used in our previous experiments. The HRTF is measured iteratively with a single loudspeaker, with the subject repositioned on a rotating chair for each measurement; the speaker is moved vertically along a pole to obtain a set of azimuth measurements at each elevation. Room reflections are eliminated from the measurement, and subject movement is compensated for in the calculation of interaural time delays.

A software script (Matlab from The MathWorks, Inc.) guided the experimenter in measuring the HRTF map at 30-degree azimuth increments at six elevations: -36, -18, 0, 18, 36 and 54 degrees relative to eye level. The experimenter monitored a head-tracking device to position the subject for each measurement. Golay code pairs are used to obtain the raw impulse response; subsequently, a diffuse-field equalization that compensates for the headphone, microphone and loudspeaker transfer functions is applied. The measurements are then processed into an HRTF “map”, consisting of an array of 256-point, minimum-phase impulse responses at a sample rate of 44.1 kHz. Further details will appear in a later version of this paper.
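The principle behind the Golay code measurement can be shown in a few lines. This is a generic sketch, not the Snapshot implementation: it assumes a noiseless, time-invariant linear system, periodic (circular) excitation, and an impulse response shorter than the code length, and the system under test is a hypothetical one simulated by circular convolution.

```python
from collections.abc import Callable


def golay_pair(order: int) -> tuple[list[int], list[int]]:
    """Complementary Golay pair of length 2**order via recursive concatenation."""
    a, b = [1], [1]
    for _ in range(order):
        a, b = a + b, a + [-v for v in b]
    return a, b


def circular_convolve(x: list[float], h: list[float]) -> list[float]:
    """Periodic response of a linear system h to one period of the excitation x."""
    n = len(x)
    return [sum(h[k] * x[(j - k) % n] for k in range(len(h))) for j in range(n)]


def circular_xcorr(ref: list[int], y: list[float]) -> list[float]:
    """Circular cross-correlation of the recorded response y with the excitation ref."""
    n = len(ref)
    return [sum(ref[m] * y[(m + lag) % n] for m in range(n)) for lag in range(n)]


def measure_ir(system: Callable[[list[int]], list[float]], order: int = 6) -> list[float]:
    """Recover an impulse response shorter than 2**order samples from a Golay pair.

    The autocorrelations of a and b sum to 2N at lag 0 and cancel at every other
    lag, so adding the two cross-correlations leaves 2N times the impulse response.
    """
    a, b = golay_pair(order)
    n = len(a)
    ra = circular_xcorr(a, system(a))
    rb = circular_xcorr(b, system(b))
    return [(p + q) / (2 * n) for p, q in zip(ra, rb)]


if __name__ == "__main__":
    h_true = [0.0, 1.0, 0.5, -0.25, 0.1]  # hypothetical short impulse response
    estimate = measure_ir(lambda x: circular_convolve(x, h_true))
    print([round(v, 6) for v in estimate[:6]])
```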

4.3 Reverberation simulation

A room prediction-auralization software package (CATT-Acoustic) was used to generate both an individualized and a generic binaural version of an existing multi-purpose performance space. This space has a volume of about 1000 m³ (8.9 m width, 14.3 m length, 7.9 m height) and is essentially a rectangular box with gypsum board mounted on stud walls, a cement ceiling, and a wooden parquet floor with seating. Velour curtains hang on the two opposite long sides of the hall. The modeled source and receiver were positioned asymmetrically in the room, 5.3 m apart and 1 m off the center line of the room, with the source 4.7 m from the back wall. The source directivity was specified in octave bands based on a model of the human voice. A total of 10,088 rays were calculated using a hybrid cone-tracing technique (see [19, 20]).

The acoustical characteristics of the modeled room were initially compared with measurements made in the real room to verify the match of significant acoustical parameters such as octave-band reverberation times and early reflection arrivals (see [21]). The modeled and real room agreed within 0.1 s in each octave band from 125 Hz to 4 kHz, with a mid-band RT of 0.9 s. However, the existing space was considered overly dry (unreverberant) for the purposes of the current experiment, since the reverberation in the full auralization condition was meant to be obvious to the non-expert subjects. Consequently, the absorptive materials in the virtual room were adjusted to achieve a mid-band reverberation time of 1.5 s, partly by removing seats from the room model. The resulting reverberation times were as follows:

| Octave band center frequency (Hz) | 125 | 250 | 500 | 1k | 2k | 4k |
|---|---|---|---|---|---|---|
| Reverberation time T-30 (s) | 2.2 | 1.9 | 1.5 | 1.5 | 1.5 | 1.4 |
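As a rough plausibility check on these figures, the stated dimensions give a volume of about 1005 m³, and Sabine's diffuse-field formula RT = 0.161 V/A indicates how much the equivalent absorption area A had to fall to raise the mid-band RT from 0.9 s to 1.5 s. This is only an approximation assumed for illustration; the room model itself used cone tracing [19, 20], not Sabine's formula.

```python
def room_volume_m3(width_m: float, length_m: float, height_m: float) -> float:
    """Volume of a rectangular room."""
    return width_m * length_m * height_m


def sabine_absorption_m2(volume_m3: float, rt_s: float) -> float:
    """Equivalent absorption area implied by Sabine's formula RT = 0.161 V / A (SI units)."""
    return 0.161 * volume_m3 / rt_s


if __name__ == "__main__":
    volume = room_volume_m3(8.9, 14.3, 7.9)
    print(f"volume: {volume:.0f} m^3")
    for rt in (0.9, 1.5):  # measured and target mid-band reverberation times
        print(f"RT {rt} s -> about {sabine_absorption_m2(volume, rt):.0f} m^2 of absorption")
```

Under this approximation the required absorption falls from roughly 180 m² to roughly 108 m².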

The early reflections out to 80 ms contained no noticeable echoes, and were

uniformly dense from all directions after about 12 ms.

A customized Matlab script took the complete HRTF map measured by Snapshot and produced an interpolated version, up-sampled from 44.1 to 48 kHz, for use in the room modeling program. The room modeling program then generated 256 binaural impulse responses of the first 80 ms of the room response, representing 1.5-degree increments of listener motion relative to the source. In addition, two binaural impulse responses were generated representing the diffuse field from 80 ms to 2 s. The early reflection binaural impulse responses were updated in real time for both the anechoic and early reflection conditions by the Lake hardware/software, which performed real-time, low-latency convolution. The anechoic condition was achieved by using only the part of the impulse response representing the direct arrival. The “full auralization” condition added the late diffuse reverberation response (80 ms-2.2 s), which did not vary with head motion.
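How the three reverberation conditions relate to a single room response can be sketched as time windowing. This simplified sketch treats one ear channel as a plain list, ignores the head-tracked switching among the 256 early-reflection responses, and keeps the late tail in the same response rather than in the separate fixed convolution used in the study; the end of the direct-sound window is a hypothetical parameter, since the exact window is not specified.

```python
EARLY_END_S = 0.080  # end of the head-tracked early-reflection segment
LATE_END_S = 2.2     # end of the late diffuse reverberation


def truncate_ir(ir: list[float], fs: int, end_s: float) -> list[float]:
    """Keep the samples before end_s and zero the rest, preserving the length."""
    cut = min(len(ir), round(end_s * fs))
    return ir[:cut] + [0.0] * (len(ir) - cut)


def condition_ir(ir: list[float], fs: int, condition: str, direct_end_s: float) -> list[float]:
    """Derive the impulse response for one reverberation condition from a full room response.

    direct_end_s marks the end of the direct arrival (a hypothetical parameter).
    """
    ends = {
        "anechoic": direct_end_s,
        "early reflections": EARLY_END_S,
        "full auralization": LATE_END_S,
    }
    return truncate_ir(ir, fs, ends[condition])


if __name__ == "__main__":
    fs = 48_000
    print("early-reflection segment length:", round(EARLY_END_S * fs), "samples")
```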

5. RESULTS

A 2 × 2 × 3 (head tracking on/off × generic/individual HRTF type × reverberation treatment) univariate repeated-measures analysis of variance (ANOVA) was conducted for each of the five dependent measures: azimuth error, elevation error,


front-back reversal rate, externalization, and subjective rating of sound realism. An alpha level of .05 was used for all statistical tests unless otherwise indicated. The Geisser-Greenhouse correction was used to adjust for possible violations of sphericity when testing repeated-measures effects involving more than two levels.
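The corrected degrees of freedom reported below follow from the Geisser-Greenhouse epsilon: for a factor with k levels and n subjects the df become ε(k - 1) and ε(k - 1)(n - 1), so the reported F(1.38, 11.05) for reverberation (k = 3, n = 9) corresponds to ε ≈ 0.69. A minimal sketch, assuming the per-subject covariance matrix of the condition means is available (it is not reported here):

```python
def gg_epsilon(cov: list[list[float]]) -> float:
    """Geisser-Greenhouse epsilon from the k x k covariance matrix of the repeated measures.

    Double-centering the (symmetric) covariance matrix projects it onto the
    orthonormal contrasts among levels; epsilon = tr(D)^2 / ((k - 1) tr(D^2)).
    """
    k = len(cov)
    row_means = [sum(row) / k for row in cov]
    grand = sum(row_means) / k
    d = [[cov[i][j] - row_means[i] - row_means[j] + grand for j in range(k)] for i in range(k)]
    trace = sum(d[i][i] for i in range(k))
    trace_sq = sum(d[i][j] * d[j][i] for i in range(k) for j in range(k))
    return trace * trace / ((k - 1) * trace_sq)


def corrected_df(epsilon: float, k: int, n_subjects: int) -> tuple[float, float]:
    """Numerator and denominator df of a repeated-measures main effect after correction."""
    return epsilon * (k - 1), epsilon * (k - 1) * (n_subjects - 1)


if __name__ == "__main__":
    # An epsilon of about 0.69 with 3 levels and 9 subjects corresponds to roughly F(1.38, 11.04).
    print(tuple(round(v, 2) for v in corrected_df(0.69, k=3, n_subjects=9)))
```

Epsilon equals 1 when sphericity holds and has a lower bound of 1/(k - 1).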

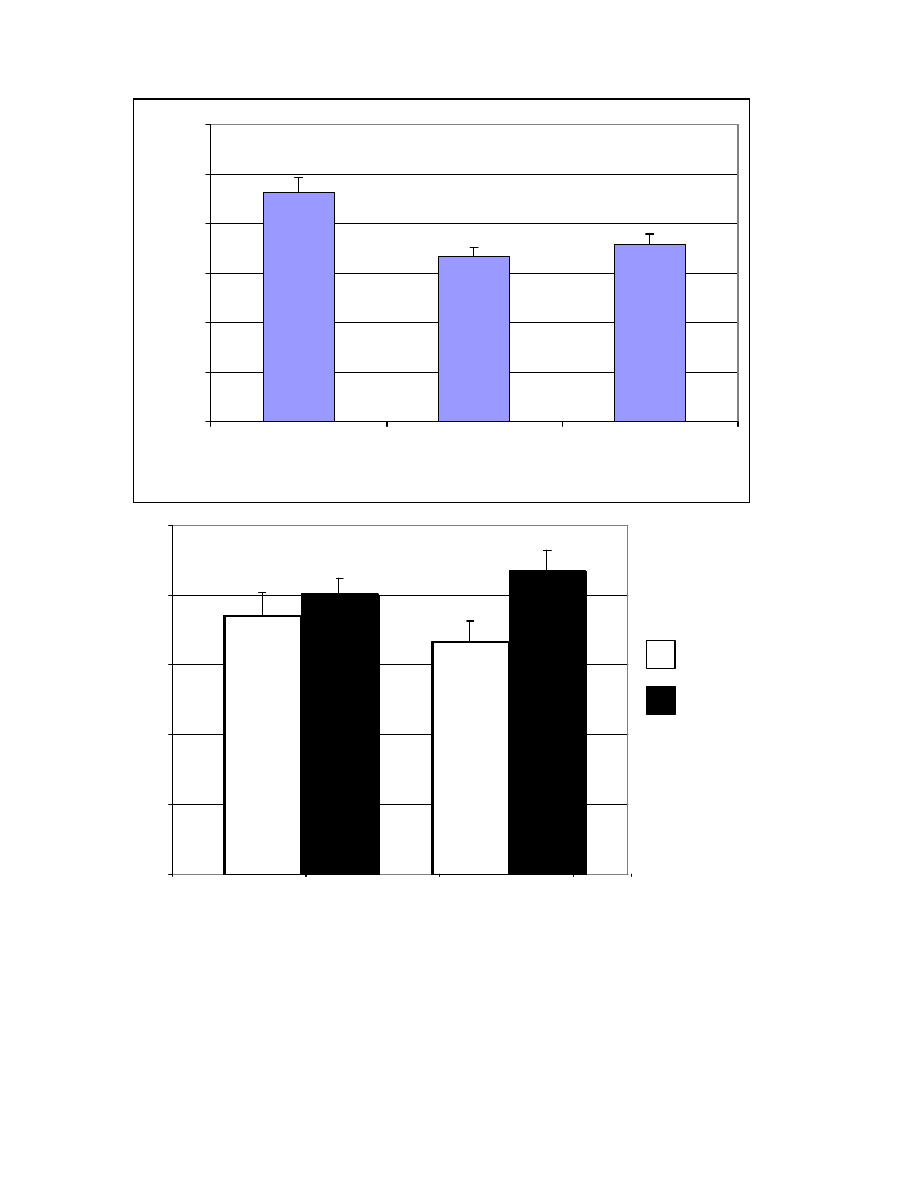

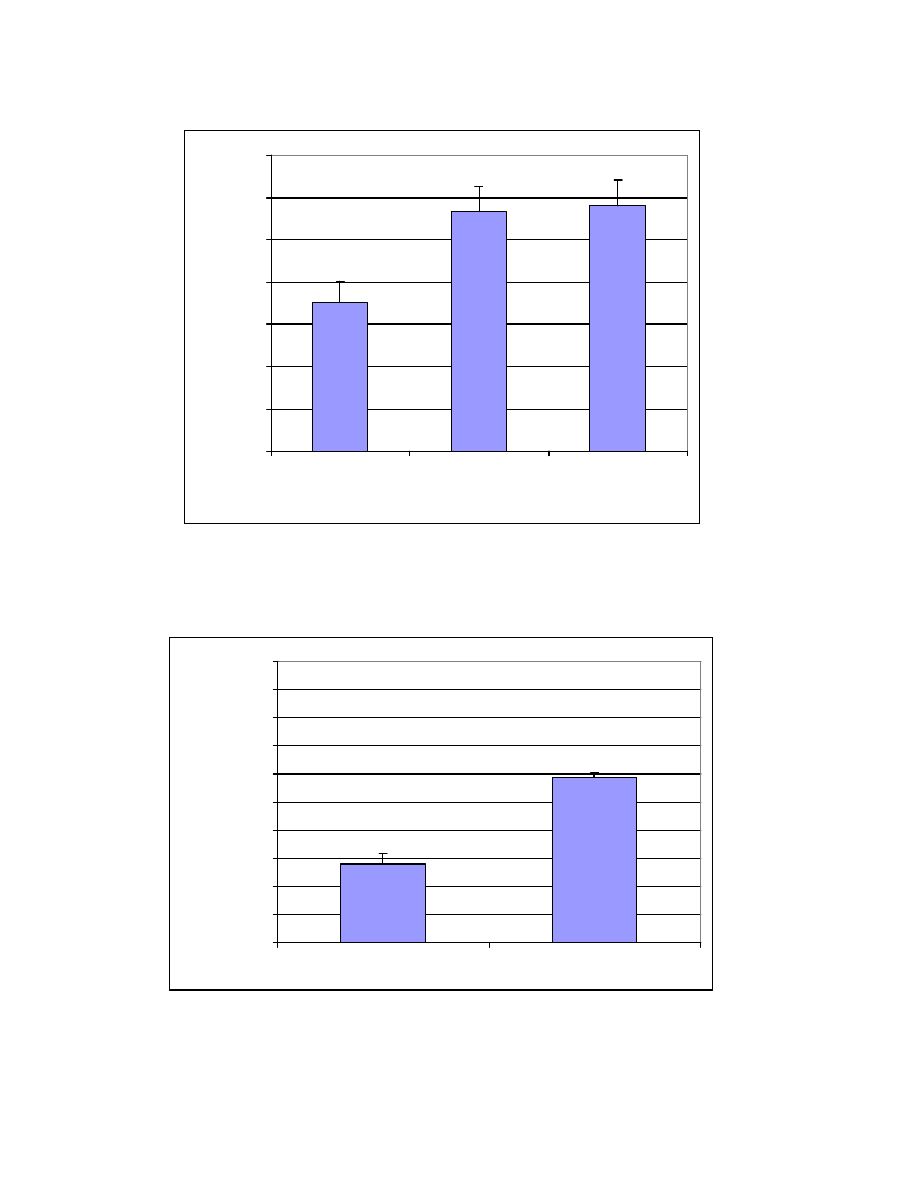

5.1 Azimuth error

Azimuth error was defined as the unsigned deviation, in degrees, of each azimuth judgment from the target azimuth, corrected for frontal-plane and median-plane reversals. A significant main effect was found for reverberation type, even after reducing the degrees of freedom with the Geisser-Greenhouse correction: F(1.38, 11.05) = 5.19, p = .035; see Figure 3, top. The main effects of head tracking and HRTF type were non-significant.
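The reversal correction is not spelled out in detail, so the following is only one plausible reading, stated as an assumption: a response is compared with the target both as given and after mirroring across the frontal plane (az to 180 - az), the median plane (az to -az), or both, and the smallest unsigned difference is scored. Azimuths are in degrees, with 0 in front and positive values to the right.

```python
def wrap180(angle: float) -> float:
    """Map an angle in degrees to the interval (-180, 180]."""
    wrapped = (angle + 180.0) % 360.0 - 180.0
    return 180.0 if wrapped == -180.0 else wrapped


def angular_distance(a: float, b: float) -> float:
    """Unsigned angular difference in degrees, between 0 and 180."""
    return abs(wrap180(a - b))


def corrected_azimuth_error(target: float, response: float) -> float:
    """Unsigned azimuth error after allowing for reversals (an assumed scoring rule).

    Candidates: the raw response, its mirror across the frontal plane (180 - az),
    across the median plane (-az), and across both (az - 180).
    """
    candidates = (response, 180.0 - response, -response, response - 180.0)
    return min(angular_distance(target, c) for c in candidates)


if __name__ == "__main__":
    # A 45-degree target heard at 140 degrees counts as a 5-degree error after correction.
    print(corrected_azimuth_error(45.0, 140.0))
```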

A post-hoc test (Scheffe) found no significant difference between the early reflection and full auralization conditions. An additional post-hoc test comparing the combined reverberant (early reflection and full auralization) conditions with the anechoic condition was also non-significant, F(1, 8) = 6.08, p > .05, perhaps due to the conservative nature of the Scheffe test. Despite this lack of significance in the post-hoc test, an estimate of partial ω² indicated that the presence of reverberation in the stimuli, excluding all other treatment effects, had a large effect on azimuth error (partial ω² = .22).¹ Specifically, the reverberant conditions resulted in less azimuth error (mean = 17.32, SD = 5.82) than the anechoic conditions (mean = 23.19, SD = 9.07).

Although non-significant, the effect of head tracking on azimuth error, excluding all other treatments, was moderate, with an estimated partial ω² of .14. Head-tracked stimuli resulted in smaller azimuth error (mean = 17.64, SD = 7.96) than non-head-tracked stimuli (mean = 20.91, SD = 6.83).

The analysis also revealed a significant two-way interaction between head tracking and HRTF type for azimuth error, F(1, 8) = 20.07, p = .002; see Figure 3 (bottom). Subsequent post-hoc tests of the simple effects of head tracking in the individualized and generic HRTF conditions (Bonferroni, alpha = .0167) indicated no significant effect of head tracking for either HRTF type, perhaps due to the conservative nature of the Bonferroni test. Although non-significant, an estimated partial ω² of .30 indicates that the effect of head tracking in the non-individualized HRTF conditions was large, independent of all other treatments. For non-individualized HRTF conditions, head-tracked stimuli resulted in smaller azimuth error (mean = 16.70, SD = 7.71) than no head tracking (mean = 21.74, SD = 7.82).

¹ Partial ω² is a measure of relative treatment magnitude; it reflects the proportion of total variability explained by an experimental treatment, independent of all other treatments [22]. In general, ω² values on the order of .01, .06, and .15 indicate small, medium and large treatment effects, respectively.
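The estimator of partial ω² is not stated explicitly. One common estimate computed from an F ratio is df(F - 1) / [df(F - 1) + a·n], with a the number of levels compared and n the number of subjects; we assume that form here. With a = 2 and n = 9 it reproduces the reported value of .22 from the contrast F(1, 8) = 6.08, since 5.08 / (5.08 + 18) ≈ .22.

```python
def partial_omega_squared(f_ratio: float, df_effect: float, n_levels: int, n_subjects: int) -> float:
    """Estimate partial omega^2 from an F ratio: df (F - 1) / (df (F - 1) + levels * n).

    Negative estimates (F < 1) are conventionally reported as zero.
    """
    numerator = df_effect * (f_ratio - 1.0)
    return max(0.0, numerator / (numerator + n_levels * n_subjects))


if __name__ == "__main__":
    # Combined reverberant vs. anechoic contrast for azimuth error, F(1, 8) = 6.08.
    print(round(partial_omega_squared(6.08, df_effect=1, n_levels=2, n_subjects=9), 2))
```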


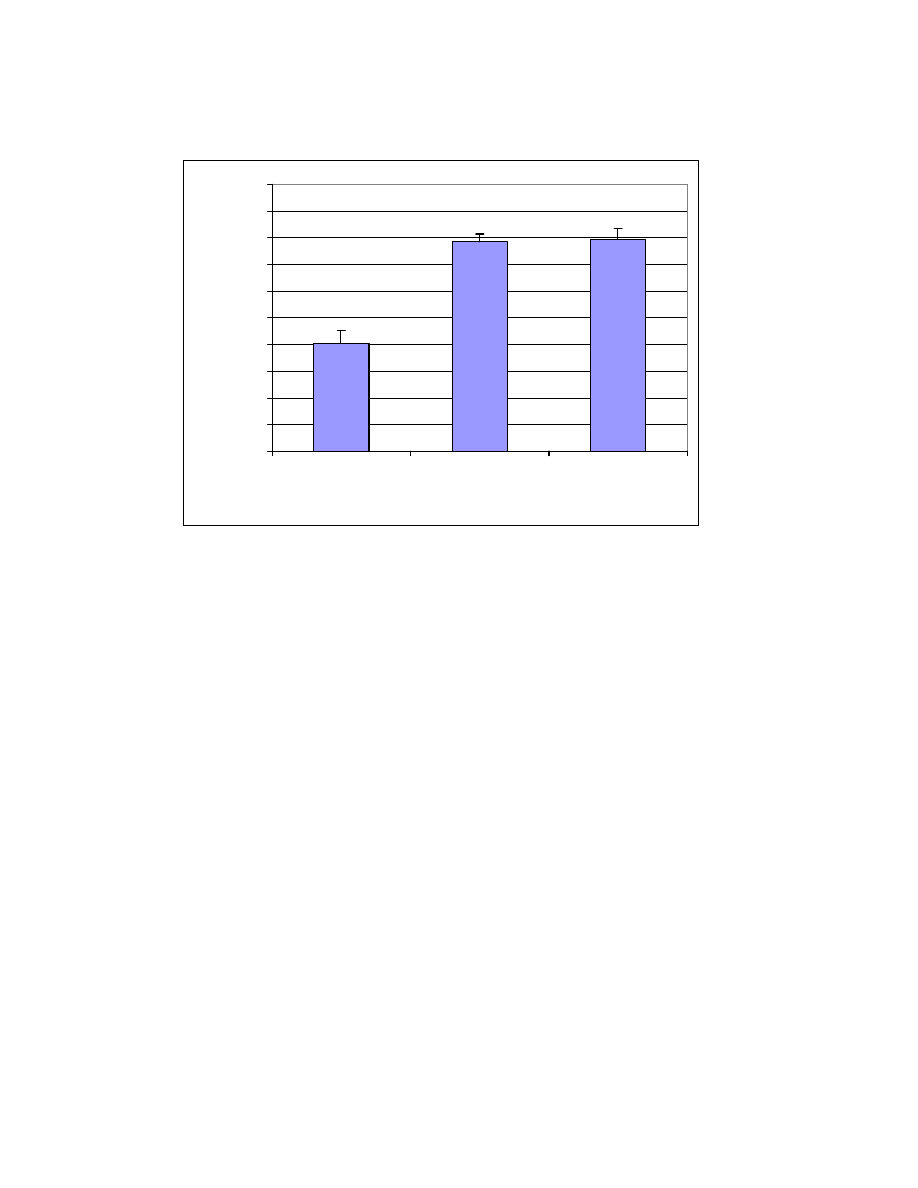

5.2 Elevation error

Elevation error was defined as the unsigned deviation, in degrees, of an

elevation judgment from an eye-level target (“0 degrees elevation”). A significant

main effect was found for reverberation, F(1.20, 9.57) = 5.15, p = .043, applying the

Geisser-Greenhouse correction; see Figure 4. No significant main effects or

interactions were found for head tracking or HRTF type.

In contrast to its facilitating effect on azimuth error, the presence of reverberation in the stimuli increased the magnitude of elevation errors (mean = 28.67, SD = 17.76) compared to anechoic conditions (mean = 17.64, SD = 14.60). A post-hoc comparison (Bonferroni, alpha = .0167) revealed no significant difference in elevation error between the early reflection and full auralization conditions. In addition, a Scheffe test found no significant difference between the combined reverberant conditions and the anechoic conditions. However, an estimated partial ω² of .21 indicates that reverberation had a large effect on elevation error relative to the anechoic condition, increasing it.

5.3 Reversal rates

The ANOVA indicated that head tracking significantly reduced reversals (front-back and back-front confusion rates) compared with stimuli without head tracking, F(1, 8) = 31.14, p = .001; see Figure 5. The overall mean reversal rate for head-tracked conditions was 28% (SD = 25%), compared with 59% (SD = 12%) for non-head-tracked conditions. No other treatments or interactions yielded significant effects on reversal rates. (A separate analysis of front-back and back-front confusions has not yet been made, but will be presented in a future paper.)
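The scoring rule for reversals is not given here. A minimal sketch under the assumption that a reversal is counted whenever target and response lie in opposite front/back hemifields; responses exactly on the interaural axis are not counted.

```python
import math


def hemifield(azimuth_deg: float) -> int:
    """+1 for the front hemifield, -1 for the back, 0 on the interaural axis."""
    c = math.cos(math.radians(azimuth_deg))
    if abs(c) < 1e-9:
        return 0
    return 1 if c > 0 else -1


def is_reversal(target_deg: float, response_deg: float) -> bool:
    """True when target and response fall in opposite front/back hemifields."""
    t, r = hemifield(target_deg), hemifield(response_deg)
    return t != 0 and r != 0 and t != r


def reversal_rate(trials: list[tuple[float, float]]) -> float:
    """Proportion of (target, response) pairs scored as front-back or back-front reversals."""
    return sum(is_reversal(t, r) for t, r in trials) / len(trials)


if __name__ == "__main__":
    example = [(45, 135), (0, 10), (180, 170), (135, 40)]  # hypothetical responses
    print(reversal_rate(example))
```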

5.4 Externalization

A sound is externalized if its location is judged to be outside the head. For this analysis, the cut-off for treating a judgment as “externalized” was set to > 5 inches instead of > 4 inches, yielding a conservative estimate that excludes judgments perceived at the edge of the head (“verged cranial”; see [23]). Note that an “inch” here is a unit relative to the graphic shown in Figure 2, not a literal judgment in inches by the listener: the edge of the head is at 4 inches, and the maximum distance judgment possible was 8 inches.
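The externalization criterion reduces to a threshold on the distance read from the response graphic. A minimal sketch using the graphic units described above (head edge at 4, cut-off at 5, maximum 8); treating the band between 4 and 5 as the excluded “verged cranial” region is our reading of the text, not an explicit definition.

```python
HEAD_EDGE_IN = 4.0      # edge of the head on the response graphic
CUTOFF_IN = 5.0         # conservative externalization criterion used in the analysis
MAX_DISTANCE_IN = 8.0   # largest distance a subject could indicate


def is_externalized(distance_in: float) -> bool:
    """A judgment counts as externalized only beyond the 5-inch cut-off."""
    if not 0.0 <= distance_in <= MAX_DISTANCE_IN:
        raise ValueError(f"distance {distance_in} is outside the response graphic")
    return distance_in > CUTOFF_IN


def is_verged_cranial(distance_in: float) -> bool:
    """Assumed edge-of-head band: outside the head graphic but not beyond the cut-off."""
    return HEAD_EDGE_IN < distance_in <= CUTOFF_IN


def externalization_rate(distances_in: list[float]) -> float:
    """Proportion of distance judgments classified as externalized."""
    return sum(is_externalized(d) for d in distances_in) / len(distances_in)


if __name__ == "__main__":
    print(externalization_rate([2.0, 4.5, 5.0, 5.1, 8.0]))  # hypothetical judgments
```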

A significant main effect of reverberation was found for the proportion of

externalized distance judgments, F(1.43, 11.43) = 13.43, p = .002 (Geisser-

Greenhouse correction); see Figure 6. Subjects externalized judgments at a mean

rate of 79% (SD = 23%) under the combined reverberant conditions, compared to

40% (SD = 29%) under the anechoic condition. No other treatments or interactions


yielded significant effects for externalized stimuli. A post-hoc test (Bonferroni, alpha

= .0167) found no significant difference between the two reverberant conditions, but

the reverberation conditions combined (Scheffe test) resulted in significantly more

externalized judgments than the anechoic conditions, F(1,8) = 18.10, p < .05.

5.5 Perceived realism

Listeners were asked to rate the perceived realism of each stimulus on a continuous scale that was subsequently encoded from 0 (least realistic) to 4 (most realistic). No significant main effects or interactions were found. Realism ratings for each of the 12 conditions, averaged over all nine participants, ranged only from 2.42 to 2.97, with an overall mean realism rating of 2.71 (SD = .55) on the 0-4 scale. This narrow range suggests either that the participants did not differentiate among conditions on the basis of perceived realism, or that they did not share a common understanding of what “realism” meant. It can be assumed, then, that all 12 conditions were equally, and moderately, “real” to the participants.

6. DISCUSSION

These results can be evaluated in light of system requirements for improved virtual acoustic simulation. Overall, they suggest that stimuli which include reverberation yield lower azimuth errors and higher externalization rates (here, by a ratio of about 2:1), but at the expense of elevation accuracy. The inclusion of head tracking significantly reduces reversal rates, also by a ratio of about 2:1, but does not significantly improve localization accuracy or externalization. Except for the interaction of head tracking and HRTF type for azimuth error, there is no clear advantage to including individualized HRTFs for improving localization accuracy, externalization or reversal rates within a virtual acoustic display of speech.

The fact that individualized HRTFs did not significantly increase azimuth accuracy is not surprising, since most of the spectral energy of speech lies at frequencies where ITD cues are more significant than spectral cues. Møller et al. also found that individualized HRTFs gave no advantage in localization accuracy for speech [9]. Further analyses will examine the correlation between the magnitude of the ITD difference between individual and generic HRTFs and individual localization performance. One might expect a strong correlation between localization error and the magnitude of this ITD difference.

It has been reported previously that reversals are mitigated by individualized HRTFs [7-9, 24, 25]. For instance, for noise stimuli in a non-dynamic simulation, an almost 3:1 increase in reversals (11% to 31%) was reported when using non-individualized HRTFs [7, 25]. This is not the case here for speech stimuli. For non-dynamic stimuli, the mean reversal rate found here (59%) was much higher than in a previous study using speech stimuli (37%) [10]. This is probably because the current study had one-third of the total stimuli on the median plane, where differences in


spectral cues are minimal between front and back. Only one-twelfth of the stimuli in

the previous study were on the median plane.

Previous studies have reported on the problem of inside-the-head localization and the externalization advantage of using reverberation [5, 13, 26]. The current study indicates that 80 ms of early reflections alone, as opposed to a full auralization including the late reverberant field, is sufficient. The early reflections lower the interaural cross-correlation of the binaural signal over time as the speech is voiced, relative to the anechoic stimuli [27]. It is likely that this increased differentiation of the binaural signal over time, rather than the cognitive recognition of a room, is responsible for the externalization effect.
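The interaural cross-correlation mentioned above is commonly summarized as the IACC: the maximum absolute value of the normalized cross-correlation between the two ear signals over lags of about ±1 ms. A minimal sketch of that measure in pure Python, adequate for short segments; it illustrates the quantity only and is not the analysis of [27].

```python
import math


def iacc(left: list[float], right: list[float], fs: int, max_lag_s: float = 0.001) -> float:
    """Maximum absolute normalized interaural cross-correlation within +/- max_lag_s."""
    n = len(left)
    if len(right) != n:
        raise ValueError("left and right signals must have equal length")
    norm = math.sqrt(sum(x * x for x in left) * sum(y * y for y in right))
    if norm == 0.0:
        raise ValueError("signals must not be silent")
    max_lag = int(max_lag_s * fs)
    best = 0.0
    for lag in range(-max_lag, max_lag + 1):
        # Sum left[i] * right[i + lag] over the indices where both samples exist.
        s = sum(left[i] * right[i + lag] for i in range(max(0, -lag), min(n, n - lag)))
        best = max(best, abs(s) / norm)
    return best


if __name__ == "__main__":
    fs = 48_000
    direct = [1.0 if i == 0 else 0.0 for i in range(400)]
    delayed = [1.0 if i == 5 else 0.0 for i in range(400)]  # same arrival, small ITD
    print(iacc(direct, delayed, fs))
```

Identical ear signals, or a copy delayed within the lag range, give an IACC of 1; energy that arrives differently at the two ears, as early reflections from many directions do, lowers it.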

It was surprising that the presence of reverberation improved the accuracy of azimuth estimation by approximately 5 degrees. Typically, reverberation introduces a smearing effect in the form of image broadening that would make the location of the image less precise. On the other hand, non-externalized responses are not actually “localized” in the normal sense; it is possible that sounds heard within the head are less precisely localized, and that localization resolution improves beyond a certain distance. There could also have been a response bias: it may have been easier on the graphic response screen to represent a perceived azimuth accurately when the distance judgment was larger.

Elevation biases were observed previously for virtual speech stimuli using non-

individualized HRTFs [10, 28]. Particularly for sound sources at 0 degrees azimuth

and elevation, virtual acoustic stimuli (as well as dummy head recordings) are

frequently perceived as located upwards, within or at the edge of the head, when

auditioned through headphones. We have not yet analyzed the elevation data presented here in detail, but we can informally note that the bias is upward, as previously reported [10, 28]. There is no explanation for this phenomenon at this point.

Head tracking did not significantly increase externalization rates, nor did it yield significantly more accurate judgments of azimuth. Head tracking primarily served to reduce reversals, a phenomenon explained by the differential integration of interaural cues over time as the cone of confusion is resolved by head motion [27]. These findings contrast with previous results indicating that head movements enhance source externalization [13, 29, 30]. In the post-hoc analysis of the data presented here, head tracking had a moderate, though non-significant, effect on azimuth error, with head-tracked stimuli resulting in about a 3-degree improvement. A future version of this paper will examine head movement data to determine any correlations between accuracy and the characteristics of the head movements used by each listener. The fact that the stimuli were only three seconds long could have been a contributing factor.

The perceived realism ratings could have been affected by many factors. No instructions on how to judge realism were given, perhaps causing subjects to use different criteria that all resulted in a relatively “neutral” judgment. It was surprising that full auralization with head tracking was not judged significantly more realistic than the anechoic conditions. It may simply be that realism is difficult to associate with a three-second segment of speech in a laboratory simulation.


In a future version of this paper, a more extensive analysis of the data will be

presented that will include reference to individual differences amongst the subjects,

an analysis of head tracking, and an exploration of the data for specific azimuths.

This latter issue is important since the proportion of median-plane stimuli in the

present study was large. This should be taken into account when comparing our

localization and reversal findings to previous studies.

ACKNOWLEDGEMENTS

Many thanks are due to Jonathan Abel and Kevin Jordan for their assistance in the completion of this work. This work was partially supported by NASA-San José State University co-operative research grant NCC 2-1095.

REFERENCES

[1] Wightman, F.L. and Kistler, D.J., "The importance of head movements for

localizing virtual auditory display objects", in Proceedings of the 1994 International

Conference on Auditory Displays, Santa Fe, N.M. (1995).

[2] Wightman, F.L. and Kistler, D.J., "Factors affecting the relative salience of sound localization cues", in Binaural and Spatial Hearing in Real and Virtual Environments, R. Gilkey and T. Anderson, Eds., (Lawrence Erlbaum, Mahwah, N.J., 1997).

[3] Wenzel, E.M., "Effect of increasing system latency on localization of virtual

sounds", in Audio Engineering Society 16th International Conference on Spatial

Sound Reproduction, (1999).

[4] Lehnert, H. and Blauert, J., "Principles of Binaural Room Simulation", Applied Acoustics, vol. 36, pp. 259-291 (1992).

[5] Begault, D.R., "Perceptual effects of synthetic reverberation on three-

dimensional audio systems", Journal of the Audio Engineering Society, vol. 40, pp.

895-904 (1992).

[6] Kleiner, M., Dalenbäck, B.-I., and Svensson, P., "Auralization-an overview",

Journal of the Audio Engineering Society, vol. 41, pp. 861-875 (1993).

[7] Wenzel, E.M., Arruda, M., Kistler, D.J. and Wightman, F.L., "Localization using

non-individualized head-related transfer functions", Journal of the Acoustical

Society of America, vol. 94, pp. 111-123 (1993).

[8] Wightman, F.L., Kistler, D. J., and Perkins, M. E., "A new approach to the study

of human sound localization", in Directional Hearing, W. Yost and G. Gourevitch,

Eds., (Springer Verlag, New York, 1987).

[9] Møller, H., Sørensen, M. F., Jensen, C. B., and Hammershøi, D., "Binaural

technique: do we need individual recordings?", Journal of the Audio Engineering

Society, vol. 44, pp. 451-469 (1996).


[10] Begault, D.R. and Wenzel, E. M., "Headphone Localization of Speech", Human

Factors, vol. 35, pp. 361-376 (1993).

[11] Wenzel, E.M. and Foster, S.H., "Perceptual consequences of interpolating

head-related transfer functions during spatial synthesis", in Proceedings of the

ASSP (IEEE) Workshop on Applications of Signal Processing to Audio and

Acoustics, October 17-20, New Paltz, NY, (1993).

[12] Laws, P., "Entfernungshören und das Problem der Im-Kopf-Lokalisiertheit von Hörereignissen" [Auditory distance perception and the problem of "in-head localization" of sound images], Acustica, vol. 29, pp. 243-259 (1973) (NASA Technical Translation TT-20833).

[13] Durlach, N. I., Rigopulos, A., Pang, X. D., Woods, W. S., Kulkarni, A., Colburn, H. S., and Wenzel, E., "On the externalization of auditory images", Presence: Teleoperators and Virtual Environments, vol. 1 (1992).

[14] Mills, W., "Auditory localization", in Foundations of Modern Auditory Theory,

J.V. Tobias, Ed. (Academic Press, New York, 1972).

[15] Wenzel, E.M., "The impact of system latency on dynamic performance in virtual

acoustic environments", in Proceedings of the 15th International Congress on

Acoustics and 135th Meeting of the Acoustical Society of America, Seattle, WA,

(1998).

[16] Adelstein, B.D., Johnston, E.R., and Ellis, S., "Dynamic response of

electromagnetic spatial displacement trackers", Presence: Teleoperators and

Virtual Environments, vol. 5, pp. 302-318 (1996).

[17] Bang and Olufsen, Music for Archimedes, compact disc B&O 101 (1992).

[18] Møller, H., Sørensen, M. F., Hammershøi, D., and Jensen, C. B., "Head-related

transfer functions of human subjects", Journal of the Audio Engineering Society,

vol. 43, pp. 300-321 (1995).

[19] Dalenbäck, B.-I., "Verification of prediction based on randomized tail-corrected

cone-tracing and array modeling", in Proceedings of the 137th meeting of the

Acoustical Society of America, 2nd Convention of the European Acoustics

Association (Forum Acusticum 99) and the 25th German DAGA Conference, Berlin

(1999).

[20] Dalenbäck, B.-I., A new model for room acoustic prediction and absorption, Dissertation, Chalmers University of Technology (1995).

[21] Begault, D.R. and Abel, J.S., "Studying room acoustics using a monopole-

dipole microphone array", in 16th International Congress on Acoustics and 135th

Meeting of the Acoustical Society of America, Seattle, WA, (1998).

[22] Keppel, G., Design and Analysis: a Researcher's Handbook, 3rd ed. (Prentice-Hall, Upper Saddle River, NJ, 1991).

[23] Plenge, G., "On the difference between localization and lateralization", Journal of the Acoustical Society of America, vol. 56, pp. 944-951 (1974).

[24] Weinrich, S., "The problem of front-back localization in binaural hearing", in Tenth Danavox Symposium on Binaural Effects in Normal and Impaired Hearing, Denmark (1982).


[25] Wightman, F.L. and Kistler, D.J., "Headphone simulation of free-field listening. II: Psychophysical validation", Journal of the Acoustical Society of America, vol. 85, pp. 868-878 (1989).

[26] Toole, F.E., "In-head localization of acoustic images", Journal of the Acoustical Society of America, vol. 48, pp. 943-949 (1970).

[27] Blauert, J., Spatial Hearing: The Psychophysics of Human Sound Localization (MIT Press, Cambridge, 1983).

[28] Begault, D.R., "Perceptual similarity of measured and synthetic HRTF filtered speech stimuli", Journal of the Acoustical Society of America, vol. 92, p. 2334 (1992).

[29] Wenzel, E.M., "What perception implies about implementation of interactive virtual acoustic environments", in Audio Engineering Society 101st Convention, Los Angeles, preprint 4353 (1996).

[30] Loomis, J.M., Hebert, C., and Cicinelli, J.G., "Active localization of virtual sounds", Journal of the Acoustical Society of America, vol. 88, pp. 1757-1764 (1990).


REVERB TYPE      anechoic                early reflections       full auralization
HRTF USED        individual  generic     individual  generic     individual  generic
HEAD TRACKING    on    off   on    off   on    off   on    off   on    off   on    off
externalization  •     •     •     •     •     •     •     •     •     •     •     •
reversals        •     •     •     •     •     •     •     •     •     •     •     •
azimuth          •     •     •     •     •     •     •     •     •     •     •     •
elevation        •     •     •     •     •     •     •     •     •     •     •     •
realism          •     •     •     •     •     •     •     •     •     •     •     •

FIGURE 1. Experimental conditions by block (top three rows); the dependent variables are listed in the first column.
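Figure 1 describes a fully crossed design: three reverberation treatments × two HRTF types × two head-tracking states, giving 12 conditions, with every dependent variable collected in each. The minimal Python sketch below only enumerates those cells in the column order of the table. The factor labels and the `conditions` helper are illustrative assumptions, and the sketch makes no claim about how the authors ordered or blocked trials.

```python
from itertools import product

# Factor levels in the order used by the columns of Figure 1 (labels are illustrative).
REVERB_TYPES = ("anechoic", "early reflections", "full auralization")
HRTF_TYPES = ("individual", "generic")
HEAD_TRACKING = ("on", "off")


def conditions():
    """Return all reverb x HRTF x head-tracking cells of the 3 x 2 x 2 design.

    itertools.product varies the last factor fastest, so head tracking
    alternates on/off within each HRTF type, as in the Figure 1 columns.
    """
    return [
        {"reverb": reverb, "hrtf": hrtf, "tracking": tracking}
        for reverb, hrtf, tracking in product(REVERB_TYPES, HRTF_TYPES, HEAD_TRACKING)
    ]


if __name__ == "__main__":
    for number, cell in enumerate(conditions(), start=1):
        print(number, cell["reverb"], cell["hrtf"], cell["tracking"])
```

Using `product` keeps the condition list tied to the factor definitions. Adding a level to any factor therefore changes the design without hand-editing a table.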

FIGURE 2. Response screen graphic. Subjects indicated azimuth and distance on the left view, elevation on the right view, and perceived realism on a slider.


[Figure 3, top panel: unsigned azimuth error (degrees) by reverberation treatment (anechoic, early reflections, full auralization). Bottom panel: unsigned azimuth error (degrees) for individual and generic HRTFs, static and tracked.]

FIGURE 3. Azimuth error: significant main effect (top) and interaction (below). Mean values and standard error bars shown.
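Figure 3 plots unsigned azimuth error. Because azimuth is circular, a naive difference between a 350° target and a 10° response gives 340° rather than 20°. The minimal Python sketch below computes a wrapped unsigned error. It assumes azimuths in degrees with a consistent direction convention. The helper names are hypothetical, and the sketch does not model any reversal correction the authors may have applied before scoring.

```python
def wrap_degrees(angle_deg):
    """Map an angle in degrees onto the interval [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def unsigned_azimuth_error(target_deg, response_deg):
    """Smallest absolute angle between target and response azimuth, in [0, 180]."""
    return abs(wrap_degrees(response_deg - target_deg))


def mean_unsigned_azimuth_error(trials):
    """Average unsigned error over (target_deg, response_deg) pairs."""
    errors = [unsigned_azimuth_error(t, r) for t, r in trials]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    # Hypothetical trials: (target azimuth, response azimuth) in degrees.
    trials = [(350, 10), (90, 60), (0, 0)]
    print(mean_unsigned_azimuth_error(trials))
```

Wrapping the signed difference before taking its absolute value keeps every error within 0° to 180°, regardless of where the 0°/360° seam falls.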


[Figure 4: unsigned elevation error (degrees, referenced to eye level) by reverberation treatment (anechoic, early reflections, full auralization).]

FIGURE 4. Elevation: main effect for reverberation. Mean values and standard error bars shown.

[Figure 5: front-back and back-front reversed stimuli (percent of total) for head-tracked and static conditions.]

FIGURE 5. Reversals: main effect for head tracking. Mean values and standard error bars shown.
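Figure 5 reports front-back and back-front reversals as a percentage of all stimuli. This section does not state the exact scoring rule, so the sketch below assumes a common hemisphere-based one: with 0° straight ahead and 180° directly behind, a trial counts as a reversal when the target and the response fall in opposite front/back hemispheres. The `margin_deg` exclusion zone around the interaural axis (±90°) is a hypothetical parameter, not a value from the study.

```python
def wrap_degrees(angle_deg):
    """Map an angle in degrees onto the interval [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def hemisphere(azimuth_deg, margin_deg=0.0):
    """Classify an azimuth (0 = ahead, 180 = behind) as 'front', 'back', or None.

    Azimuths within margin_deg of the interaural axis (+/-90 degrees) are left
    unclassified, since front/back is ambiguous there.
    """
    a = abs(wrap_degrees(azimuth_deg))
    if a < 90.0 - margin_deg:
        return "front"
    if a > 90.0 + margin_deg:
        return "back"
    return None


def is_reversal(target_deg, response_deg, margin_deg=0.0):
    """True if target and response lie in opposite front/back hemispheres."""
    t = hemisphere(target_deg, margin_deg)
    r = hemisphere(response_deg, margin_deg)
    return t is not None and r is not None and t != r


def reversal_percentage(trials, margin_deg=0.0):
    """Front-back plus back-front reversals as a percentage of all trials."""
    flags = [is_reversal(t, r, margin_deg) for t, r in trials]
    return 100.0 * sum(flags) / len(flags)
```

For example, `reversal_percentage([(30, 150), (30, 40), (180, 0), (0, 10)])` returns 50.0, because only the first and third trials cross between hemispheres. Trials near the interaural axis are counted in the denominator but never as reversals. That is one possible convention among several.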


[Figure 6: percentage of externalized judgments by reverberation treatment (anechoic, early reflections, full auralization).]

FIGURE 6. Externalized judgments: main effect for reverberation. Mean values and standard error bars shown.
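Figures 3 through 6 show mean values with standard error bars. For Figure 6, the sketch below converts each subject's externalization judgments into a percentage. It then takes the mean and the standard error of the mean across subjects, computed as the sample standard deviation divided by √n. Treating subjects as the unit of averaging is an assumption, since the captions do not say over what the error bars were computed. The function names and example data are hypothetical.

```python
import math
import statistics


def percent_externalized(judgments):
    """judgments: booleans, True if the image was reported outside the head."""
    return 100.0 * sum(judgments) / len(judgments)


def mean_and_standard_error(values):
    """Return (mean, standard error of the mean).

    Uses the sample standard deviation, so at least two values are required
    (statistics.stdev raises StatisticsError otherwise).
    """
    mean = statistics.fmean(values)
    se = statistics.stdev(values) / math.sqrt(len(values))
    return mean, se


if __name__ == "__main__":
    # Hypothetical per-subject judgments for one condition (True = externalized).
    per_subject = [
        [True, True, False, True],
        [False, True, False, False],
        [True, True, True, True],
    ]
    percents = [percent_externalized(j) for j in per_subject]
    mean, se = mean_and_standard_error(percents)
    print(f"{mean:.1f}% ± {se:.1f}")
```

Averaging within subjects first gives each listener equal weight in the group mean, even if subjects contributed different numbers of trials.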
